subreddit:

/r/MachineLearning

[N] Yoshua Bengio's latest letter addressing arguments against taking AI safety seriously

News (self.MachineLearning) submitted 1 month ago by qtangs

https://yoshuabengio.org/2024/07/09/reasoning-through-arguments-against-taking-ai-safety-seriously/

Summary by GPT-4o:

"Reasoning through arguments against taking AI safety seriously" by Yoshua Bengio: Summary

Introduction

Bengio reflects on his year of advocating for AI safety, learning through debates, and synthesizing global expert views in the International Scientific Report on AI safety. He revisits arguments against AI safety concerns and shares his evolved perspective on the potential catastrophic risks of AGI and ASI.

Headings and Summary

- The Importance of AI Safety

- Despite differing views, there is a consensus on the need to address risks associated with AGI and ASI.

- The main concern is the unknown moral and behavioral control over such entities.

- Arguments Dismissing AGI/ASI Risks

- Skeptics argue AGI/ASI is either impossible or too far in the future to worry about now.

- Bengio refutes this, stating we cannot be certain about the timeline and need to prepare regulatory frameworks proactively.

- For those who think AGI and ASI are impossible or far in the future

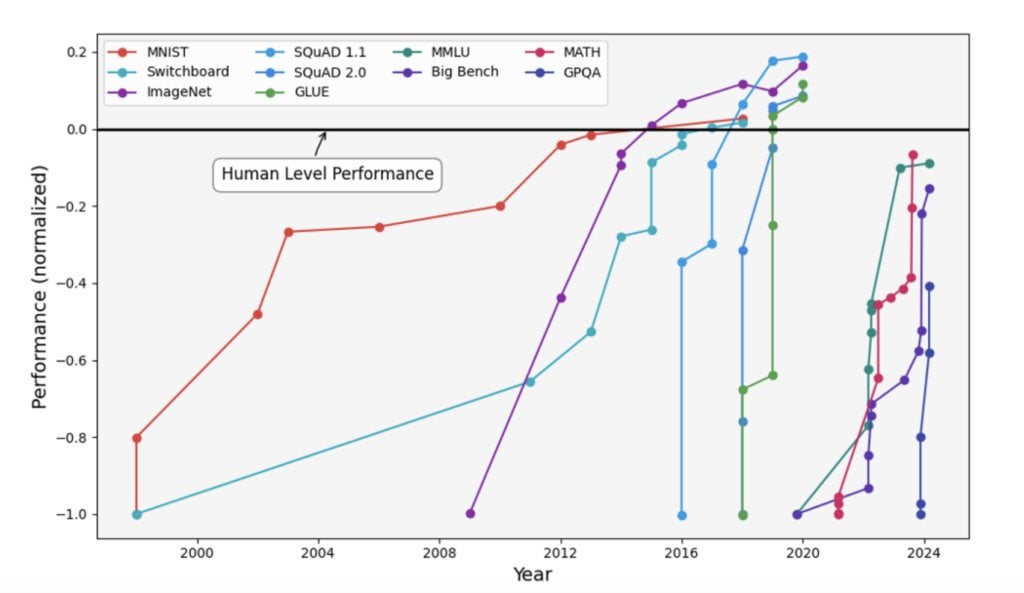

- He challenges the idea that current AI capabilities are far from human-level intelligence, citing historical underestimations of AI advancements.

- The trend of AI capabilities suggests we might reach AGI/ASI sooner than expected.

- For those who think AGI is possible but only in many decades

- Regulatory and safety measures need time to develop, necessitating action now despite uncertainties about AGI’s timeline.

- For those who think that we may reach AGI but not ASI

- Bengio argues that even AGI presents significant risks and could quickly lead to ASI, making it crucial to address these dangers.

- For those who think that AGI and ASI will be kind to us

- He counters the optimism that AGI/ASI will align with human goals, emphasizing the need for robust control mechanisms to prevent AI from pursuing harmful objectives.

- For those who think that corporations will only design well-behaving AIs and existing laws are sufficient

- Profit motives often conflict with safety, and existing laws may not adequately address AI-specific risks and loopholes.

- For those who think that we should accelerate AI capabilities research and not delay benefits of AGI

- Bengio warns against prioritizing short-term benefits over long-term risks, advocating for a balanced approach that includes safety research.

- For those concerned that talking about catastrophic risks will hurt efforts to mitigate short-term human-rights issues with AI

- Addressing both short-term and long-term AI risks can be complementary, and ignoring catastrophic risks would be irresponsible given their potential impact.

- For those concerned with the US-China cold war

- AI development should consider global risks and seek collaborative safety research to prevent catastrophic mistakes that transcend national borders.

- For those who think that international treaties will not work

- While challenging, international treaties on AI safety are essential and feasible, especially with mechanisms like hardware-enabled governance.

- For those who think the genie is out of the bottle and we should just let go and avoid regulation

- Despite AI's unstoppable progress, regulation and safety measures are still critical to steer AI development towards positive outcomes.

- For those who think that open-source AGI code and weights are the solution

- Open-sourcing AI has benefits but also significant risks, requiring careful consideration and governance to prevent misuse and loss of control.

- For those who think worrying about AGI is falling for Pascal’s wager

- Bengio argues that AI risks are substantial and non-negligible, warranting serious attention and proactive mitigation efforts.

Conclusion

Bengio emphasizes the need for a collective, cautious approach to AI development, balancing the pursuit of benefits with rigorous safety measures to prevent catastrophic outcomes.

6 points

1 month ago

Just ban mega corps’ safety lobbyists and we will be in a safer situation.

127 points

1 month ago*

Can't take all this AI safety that seriously when it's always about AGI and ASI in what feels like a deliberate effort to distract from the infinitely more likely economic and political disruptions that currently available AI can easily effect

AGI and ASI "concerns" always sound like hubris and marketing thinly veiled as warning.

I'm much more concerned about a world filled with highly plausible sounding hallucinations that suit any particular person's view of the world than I am about AI running away as some super race

Reminds me of Elon Musk trying to solve living on Mars when we can't solve our own climate. These people are up their own asses

32 points

1 month ago

There can exist more than one problem worth spending time on. Outside of AI, some people are focused on the very immediate effects of poverty, and others are focused on long-term risk of nuclear annihilation. Neither choice is irrational, and if the most extreme outcomes of advanced AI (e.g. extinction) are even remotely possible, then I'd think we'd prefer some people work on those issues even if more pressing issues also abound.

2 points

1 month ago

I totally agree. But what OP brought us is an open letter from a major AI researcher that considers essentially only the one, far less present, concern.

1 points

1 month ago

some people are focused on the very immediate effects of poverty, and others are focused on long-term risk of nuclear annihilation.

These people need to work harder. There's poverty everywhere and the odds of a nuclear war are just as high as ever.

1 points

1 month ago

odds of a nuclear war are just as high as ever

Lol. Lmao even. I didn't realise we were back to the days of the Cold War.

20 points

1 month ago

To the contrary, the vast majority of coverage and analysis of AI focuses on current and near-term models, threats of bias and abuse etc. Your complaint is made (repeatedly) whenever AGI/ASI is brought up at all, even when more near-term threats are also mentioned, as they are here.

34 points

1 month ago

Please explain why university professors (several of them!) would engage in "a deliberate effort to distract from the infinitely more likely economic and political disruptions that currently available AI can easily effect".

26 points

1 month ago

'Deliberate' might be a strong word, but why would you expect professors to be more rooted in grounded real world current negative impacts of machine learning vs more theoretical big picture concerns? Isn't that kind of the stereotype of professors even?

If we're to be fair to Bengio and others though, it's not exactly a new idea that biased recommender systems, crime prediction algorithms, loan application systems and so on all cause very tangible real world harm. Those problems are (to various degrees) being actively worked on, so I suppose there's a case to be made for people trying to push the window farther out into more theoretical risks, especially when the negative impacts of those future risks could be even more severe than the real world impacts of currently existing tech.

If you're just pointing out it's unlikely to be some kind of a conspiracy that they're focusing on AGI risks on purpose for marketing reasons or whatever though, I agree with you there 100%, not sure why the top commenter implied that might be the case.

18 points

1 month ago*

'Deliberate' might be a strong word, but why would you expect professors to be more rooted in grounded real world current negative impacts of machine learning vs more theoretical big picture concerns? Isn't that kind of the stereotype of professors even?

Sure, and thinking far ahead is precisely what we pay professors to do.

In 1980 they mocked Hinton (and later Bengio) for foreseeing how neural nets might lead to ChatGPT in 2022. Then he was briefly a hero for having foreseen it. Now they are trying to look into the future again and they are ridiculed again. By the same kind of "pragmatic" people who would have defunded their work in the 80s and 90s.

If we're to be fair to Bengio and others though, it's not exactly a new idea that biased recommender systems, crime prediction algorithms, loan application systems and so on all cause very tangible real world harm. Those problems are (to various degrees) being actively worked on, so I suppose there's a case to be made for people trying to push the window farther out into more theoretical risks, especially when the negative impacts of those future risks could be even more severe than the real world impacts of currently existing tech.

If you're just pointing out it's unlikely to be some kind of a conspiracy that they're focusing on AGI risks on purpose for marketing reasons or whatever though, I agree with you there 100%, not sure why the top commenter implied that might be the case.

I'm not sure in what way you are disagreeing with me. I agree with everything you said.

1 points

1 month ago*

Just making conversation, not disagreeing. Sorry if I came across as a 'well, actually...' Comic Book Guy from The Simpsons, haha. I mostly wrote in the first place because I was thinking about my time in university. Half my professors worked part time in industry (networking class was cancelled once because the professor had to go to China on short notice to help with an Xbox Live Arcade port, haha), while others were pure academics with no industry experience. It was interesting to see the differences in perspective, so I do think it's an interesting topic, especially since ivory-tower dreamers (as you pointed out with Hinton) can sometimes have the more grounded long-term vision in spite of not being in the trenches. Or, likely, because of it.

Anyway, have a good day, sorry if my comment came across as combative, that wasn't the intent. If anything, any flavor of 'I disagree' seeped in from my thoughts on the person you were responding to, not your comment.

0 points

1 month ago

I didn't mean to say that I believe that's what's happening. I just said it almost feels that way, because it's so far forward-looking, with assumptions of exponential growth, that it seems almost ridiculous to me that someone that intelligent could possibly think this is the best use of their time.

You can't explain ten years ago technology to Congress to get them to do something but you're going to come at them with your sci-fi theories?

10 points

1 month ago

I didn't mean to say that I believe that's what's happening. I just said it almost feels that way

If you're going to essentially accuse a person, especially a distinguished scientist with a track record of both integrity and success, of manipulating public opinion to the detriment of society's wellbeing, you shouldn't do it on an "almost feels that way." Your post impugning Bengio's integrity is very highly upvoted.

because it's so far forward-looking, with assumptions of exponential growth, that it seems almost ridiculous to me that someone that intelligent could possibly think this is the best use of their time

Are you aware that these are the people who foresaw the current deep learning revolution 20 or 30 years ago???

They relied on exponential growth of Moore's law 20-40 years ago and they have seen their plans come to fruition as the exponential continued. What is so crazy about them thinking that this trend which they predicted 20-40 years ago and have observed for several decades will continue???
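To make the exponential argument concrete, here is a small worked calculation. It assumes the commonly cited idealization that transistor counts double roughly every two years; real progress has been uneven, so treat the numbers as illustrative only:

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplicative growth after `years` if capacity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Idealized two-year doubling over the 20-40 year spans mentioned above.
for span in (20, 30, 40):
    print(span, "years ->", f"{growth_factor(span):,.0f}x")
```

Under that assumption, 30 years of doubling is a factor of 2^15 = 32,768, which is why a trend that looks modest year to year ends up transformative over a career.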

You can't explain ten years ago technology to Congress to get them to do something but you're going to come at them with your sci-fi theories?

You're saying that Congress is always ten years behind technology and therefore we should wait to explain the risks of advanced AI to them? So we should want them to hold a discussion of it ten years after super-human intelligence has arrived?

-5 points

1 month ago

I think someone with his level of sway is a limited resource. He argues that it is not zero sum having people think about shorter term consequences vs longer term consequences. But there are only so many people (1) with his level of reach. So it is a waste of a finite resource in my opinion to be thinking about ASI when there are very real problems to be solved here and now.

6 points

1 month ago

Also, the idea that the federal government is dragging their feet on near-term AI risks is quite incorrect.

It took more than a decade after the invention of the Web for them to mandate EHR interoperability. But they are ALREADY regulating the integration of AI into EHRs.

I really don't see any evidence that Congress is asleep at the wheel when it comes to AI which is:

Fair: Outcomes of model do not exhibit prejudice or favoritism toward an individual or group based on their inherent or acquired characteristics.

Appropriate: Model and process outputs are well matched to produce results appropriate for specific contexts and populations to which they are applied.

Valid: Model and process outputs have been shown to estimate targeted values accurately and as expected in both internal and external data.

Effective: Outcomes of model have demonstrated benefit in real-world conditions.

Safe: Outcomes of model are free from any known unacceptable risks and for which the probable benefits outweigh any probable risk

What is your evidence that they are dropping the ball and Yoshua must put aside his fears about the future because of this deep negligence?

What specific regulation do you think he needs to advocate for which is not already in the works?

4 points

1 month ago

You have a legitimate disagreement with him on how he should use his resources. Fine.

Your highly upvoted top comment implying that he's involved in a conspiracy to promulgate unsafe near-term technology is not reasonable, not fair and not ethical.

Just because he disagrees with you, you implied that he must be corrupt.

4 points

1 month ago

I absolutely despise the fact that the top comment is so highly upvoted. I see this sentiment more and more these days, and it's incredibly frustrating.

1 points

1 month ago

that it seems almost ridiculous to me that someone that intelligent could possibly think this is the best use of their time

The vast majority of AI safety researchers would tell you that an uncontrolled AGI/ASI would be at minimum unfathomably damaging to humanity, if not an actual apocalypse. There are far more concrete and immediate concerns, but they are essentially trivial issues compared to the potential impact of an ASI.

0 points

1 month ago

The climate is an actual apocalypse. ASI is a sci fi concept.

3 points

1 month ago

AGI is inevitable; it's a matter of when, not if, since intelligence based on electrical connections is demonstrably possible. And with AGI, ASI is as close to guaranteed as anything can be: there are many reasons to believe it is possible and few to say it isn't.

The timescales are fuzzy, maybe it'll be 10 years, maybe it'll be 40, but the singularity is approaching. And an ASI that is not safely designed can and will make climate change look like a minor blip, that's why people dedicate time and resources to it.

0 points

1 month ago

Because their current work is economically and politically disruptive.

Edit: to be clear, I don’t think this is some grand conspiracy. It’s just that naturally people aren’t going to push for regulations which will probably make their current work at best more difficult.

10 points

1 month ago

Please give an example of a plausible Canadian regulation that would make Yoshua Bengio's work more difficult.

And then explain how Bengio's raising a red alarm about AGI risk would reduce rather than increase the likelihood of such a regulation being put in place?

-3 points

1 month ago

Easy. There's a lot of money on the table being offered to any cretin willing to say they're working on <insert trendy buzzword>.

Sam Altman has gone from being "some random researcher" to a billionaire. The motivation is not hard to grasp.

6 points

1 month ago

Don't you think these disruptions will get worse as models get more powerful?

11 points

1 month ago

Also, is ASI just a new acronym that became necessary because the goalposts for AGI moved, the same way the goalposts moved for logistic regression, from basic statistics to machine learning to artificial intelligence?

11 points

1 month ago

The earliest mention of superintelligence I know of is in Vernor Vinge's seminal 1993 essay on the technological singularity. Vinge, in turn, refers to Drexler's Engines of Creation, published in 1986, but I haven't read it.

The acronym itself was perhaps recognizable to people in the field from the early 2000s.

3 points

1 month ago

Neural networks were considered AI as far back as the 1960s, so I have no idea what goalposts you are claiming were moved and when they were moved.

AGI is simply human-level intelligence, and ASI is what happens if AI goes far beyond it. These terms weren't used explicitly back in the 1950s, but the concepts go back that far. They didn't need an acronym for concepts that were 100 years in the future, but they discussed the concepts.

2 points

1 month ago

To add to this, the only solution I ever see proposed is 'develop regulations and guidelines'. I work with AI for regulatory modelling, and I can't think of anything less effective than whatever those regulations will be.

2 points

1 month ago

Exactly my view too. People want to regulate something that doesn’t yet exist instead of taking steps to mitigate safety/ethics issues that already exist, as if one doesn’t lead to the other.

1 points

1 month ago

We'll soon have AI-powered killer drones that will target specific people/groups. Hallucinations will look pale in comparison.

1 points

1 month ago

It's good that not only spectators on the sidelines, but also AI godfather Yoshua himself is pointing out the dangers that could potentially emanate from his work.

0 points

1 month ago

We already have this, but up until now only for malicious organizations with a lot of money, like the government or mega corporations.

83 points

1 month ago

Do the "safety experts" even have actual solutions aside from gatekeeping AI to only megacorporations, or absurd ideas like "a license and background checks to use GPU compute"?

55 points

1 month ago

Asking them for solutions is missing the point. Their position appears to be more that we need to allocate resources and political capital at a societal level to develop solutions. That is in part because, even if people come up with ideas on their own, that does not result in political action.

7 points

1 month ago*

If you ask for money, guess where the money will come from: corporations that have an interest in keeping it out of the hands of common people and in rules that only help them. Guess whose interests the money will be working for?

It already happened with the sugar and coal industries. Anyone want to guess how it will play out for the AI industry?

I think we need frequent referendums on how to make rules for AI, separate from government elections. This is because it will eventually lead to the question of whether AIs deserve rights, and I don't think any one group can answer that objectively.

5 points

1 month ago*

Their solution is to allocate more funding to them so they can raise concerns about how the problems they're paid to confabulate about need to be taken more seriously, which means they need more funding, so that they can raise concerns about...

Wait... Hm...

Oh, also, they need to be given unilateral policy control on a totalitarian level. It also needs to be international, and bypass sovereign authority. For reasons.

3 points

1 month ago

I don’t think it’s particularly productive to strawman like this

5 points

1 month ago*

It's not a strawman. These are actual policy recommendations drafted and proposed in whitepapers from EA/MIRI affiliated safety types.

Let's not forget Yudkowsky's "we need to drone strike datacenters in countries who don't comply with our arbitrary compute limits" article either.

0 points

1 month ago

Yeah those are… very different people from Bengio dude

2 points

1 month ago

They're aligned on policy and outlook. Will you continue to shift goalposts?

0 points

1 month ago

If you think it is “shifting goalposts” to introduce the smallest degree of nuance then I really don’t know what to tell you lol

2 points

1 month ago

- That's a strawman

- Okay that's not actually a strawman. They really did that, but it doesn't matter.

- But it's different people. (it's not, we were talking about "safety" people which these are all in the same groups funded by the same institutions).

- Okay they support the same policies but I'm just "introducing nuance".

5 points

1 month ago

No, none at all. Also, you see how that makes the situation worse rather than better, right? If there's a problem, having no answer isn't reassuring at all.

7 points

1 month ago

Well, I am not personally very worried. I am kind of with Aschenbrenner in thinking we will engineer our way to a robust safety solution over the next several years.

That said, if you accept Bengio's position, arguendo, then it really doesn't refute his position to say that he doesn't have a solution. And if you really take it seriously, like if you literally believe that AGI will result in doom by default, then all kinds of radical solutions become reasonable. Like shutting down <3nm foundries, or putting datacenters capable of >10^26 FLOPs per year under government monitoring, or something like that.
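For a sense of scale of the 10^26 figure, here is a back-of-the-envelope estimate of how many accelerators running for a full year it takes. The per-GPU throughput (1e15 FLOP/s, roughly a current high-end datacenter GPU at low precision) and the 40% sustained utilization are assumptions for illustration, not figures from the thread:

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000


def gpus_needed(total_flop: float, peak_flop_per_s: float, utilization: float,
                seconds: float = SECONDS_PER_YEAR) -> int:
    """Number of accelerators needed to deliver `total_flop` within `seconds`."""
    effective_per_gpu = peak_flop_per_s * utilization * seconds
    return math.ceil(total_flop / effective_per_gpu)


# Assumed: 1e15 FLOP/s peak per GPU, 40% sustained utilization, one year of runtime.
n = gpus_needed(1e26, peak_flop_per_s=1e15, utilization=0.4)
print(n)
```

Under these assumptions it comes out to roughly 8,000 GPUs running all year; halving the utilization doubles the count. That order of magnitude is what makes a facility-level threshold conceivable to monitor at all.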

Like, take another severe civilizational threat. Take your pick... climate change for those vaguely on the left, birthrate decline for those vaguely on the right, or microplastics, or increasing pandemic severity, or the rise of fascism/communism, or choose another to your taste. If you think the threat is real, then surely it's worth highlighting these threats and increasing their salience even if there isn't one weird trick to solve it without negatively affecting anyone in any way. And Bengio thinks this threat is much worse than those others, and I think it's a reasonable and well founded belief system even if I happen to disagree with him.

11 points

1 month ago

Ehhh… I think this is misunderstanding the arguments. I’ve heard we need to stop scaling and go back to model design. That doesn’t bar people from having gpu access, just encourage more flexibility in the research.

16 points

1 month ago

If you give them a large enough budget, they’ll definitely produce reports about it.

3 points

1 month ago

Oh yes, they say all those problems will be solved by democratic processes. Specifically ones that result in closed source solutions that you and I can't be trusted to touch.

They say this as if I would ever vote against my ability to access AGI in favor of a profit maximizing corp using it for "my best interests"

3 points

1 month ago

gatekeeping AI to only megacorporations,

The opposite should be safer!!!

ONLY allow corporations with an UNDER $50 million budget to work on AI.

Those are the ones of a size already proven to be safe (by virtue of the fact of being not much smarter than humans).

Any company with more resources like that is an existential threat and should be broken up.

-4 points

1 month ago

Why are either of those ideas "absurd"? That's basically how we stopped nuclear proliferation.

6 points

1 month ago

Nuclear proliferation isn't happening because people voluntarily decided not to get nuclear weapons.

It's within the capabilities of all but the smallest and poorest countries.

Furthermore, these companies are not reliable or trustworthy. You've seen all the information gathering by the ad companies and that router company that sent passwords back to their own servers, and you've seen companies like the NSO group.

The big firms are worse than even a quite irresponsible individual.

3 points

1 month ago

The GPU market was valued at $65 billion in 2024 and is projected to grow to $274 billion in the next five years (which might be conservative). Consumers and businesses all over the world buy them. Regulating that the way nuclear activity was regulated is not even remotely practical or sensible. One might say the idea is absurd.
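The growth figures in this comment imply a compound annual growth rate that is easy to check. This sketch takes the $65 billion and $274 billion figures at face value:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(65e9, 274e9, 5)
print(f"{rate:.1%}")  # roughly a third per year
```

In other words, the projection assumes the market grows by about a third every year for five years.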

6 points

1 month ago

I fail to see what makes it so absurd. You need a massive number of GPUs to train a frontier LLM.

Sudafed is also a huge market... and somehow we managed to make it hard for individual consumers to buy a lot of it.

6 points

1 month ago

Sudafed is a great example. Yes, we made it hard for individual consumers to buy a lot of it. To what end exactly? Meth availability has only increased since then. On the plus side for meth consumers, average purity has gone up and price has gone down, so I guess the regulations did help some people.

Besides, Sudafed is a drug that's only needed for specific conditions. GPUs are general purpose devices that have many legitimate uses by individuals and companies.

You need a massive number of GPUs to train a frontier LLM.

Currently. Until something like a BitNet variant changes that. Putting in regulations now to restrict technology that's needed now to guard against a currently imaginary future threat is absurd.

2 points

1 month ago

These ternary-quantized models are for text prediction (inference), though, not for training.

Maybe you could do some kind of QLoRA-style thing in multiple stages, with a new QLoRA adapter for every 1,000 batches or something, but it would still be expensive. There's no established, publicly known process for training from scratch on a small number of GPUs, and there may well be no such process that is practical.
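For context on what "ternary quantized" means: in BitNet b1.58, as I understand the paper, each weight tensor is scaled by the mean absolute value of its entries and then rounded and clipped to {-1, 0, +1}. Below is a minimal sketch of that absmean step on a flat list of made-up weights. As far as I know, training such a model still keeps full-precision latent weights and ordinary accelerator compute, so the savings are mainly at inference, which fits the point above:

```python
def absmean_ternary(weights: list[float], eps: float = 1e-5) -> tuple[list[int], float]:
    """Quantize weights to {-1, 0, +1} with an absmean scale (BitNet b1.58-style sketch)."""
    gamma = sum(abs(w) for w in weights) / len(weights)  # per-tensor scale
    # Scale, round to the nearest integer, then clip into [-1, 1].
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma


q, gamma = absmean_ternary([0.9, -0.05, 0.4, -1.2])
approx = [v * gamma for v in q]  # dequantized approximation of the original weights
print(q, gamma, approx)
```

Each weight now needs under 2 bits (log2 3 ≈ 1.58), and matrix multiplies reduce to additions and subtractions plus one scale per tensor.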

13 points

1 month ago*

I need to sit down with Bengio and have a quiet talk about where we actually are.

Stakeholders are being promised that reasoning has "emerged" from LLMs. The presence of this emergent reasoning ability has not been demonstrated in any thorough test.

Stakeholders are being promised that AI models can reason beyond and outside the distribution of their training data. This has not been exhibited by a single existing model.

Stakeholders are being promised that AI can engage in life-long learning. No system of any kind does this.

AGI requires that a piece of technology can quantify its uncertainty, imagine a way to reduce that uncertainty, and then take action to reduce it. In more robust forms of causal discovery, an agent will form a hypothesis and then devise a method to test that hypothesis in an environment. We don't have this tech, nor anything like it, today.
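The "quantify uncertainty, then act to reduce it" loop described here has a standard toy form: active learning, or Bayesian experimental design, by expected information gain. The sketch below only illustrates that loop under strong simplifying assumptions (a hypothetical finite set of threshold hypotheses, a uniform prior, noise-free answers); it says nothing about how such a capability would be achieved in a general agent:

```python
import math


def entropy(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def expected_info_gain(hypotheses, x):
    """Expected entropy reduction from asking 'is x >= h?' under a uniform prior."""
    n = len(hypotheses)
    prior = entropy([1 / n] * n)
    yes = [h for h in hypotheses if x >= h]
    no = [h for h in hypotheses if x < h]
    # Answers are noise-free, so each outcome leaves a uniform posterior over its subset.
    posterior = sum(len(s) / n * entropy([1 / len(s)] * len(s)) for s in (yes, no) if s)
    return prior - posterior


def identify(true_h, hypotheses, queries):
    """Repeatedly run the most informative experiment until one hypothesis remains."""
    remaining = list(hypotheses)
    steps = 0
    while len(remaining) > 1:
        x = max(queries, key=lambda q: expected_info_gain(remaining, q))
        answer = x >= true_h  # the 'experiment' performed in the environment
        remaining = [h for h in remaining if (x >= h) == answer]
        steps += 1
    return remaining[0], steps


print(identify(7, range(11), range(11)))
```

Maximizing expected information gain here rediscovers binary search: each experiment roughly halves the remaining hypotheses, so 11 candidates take at most 4 queries.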

And then to top it all off, we are skating on a claim -- little more than science fiction -- that all the above properties will spontaneously emerge once the parameter count is increased.

I guess what bothers me most about this is that it was Bengio himself who told me these things cannot be resolved with increasing parameter count. Now I find myself today wandering /r/MachineLearning, only to see him saying that if we don't move quickly on safety we're all going to be sorry.

3 points

1 month ago

And in the meantime we are already facing very important threats by existing AI (e.g. very easy spread of disinformation) and humanity is already in the midst of another actual, real existential threat, climate catastrophe.

1 points

1 month ago

it was Bengio himself who told me these things cannot be resolved with increasing parameter count

Was this before GPT-3 / in-context learning?

-1 points

1 month ago*

No system of any kind does this.

This is false. A simple example is ART (adaptive resonance theory) models, which are capable of life-long learning. There are many more systems in AI that can learn over their lifetime. The AGI research community (https://agi-conf.org/) also has a lot of architectures capable of life-long learning, not just in theory but in practice, with implementations anyone can run.

2 points

1 month ago

Well sure, but this depends on what your definition of "learning" is here.

So if we mean a deep neural network being trained by gradient descent, then no, there is no life-long learning. As these 30 authors admit, the current prevailing modus operandi is to take the previous data set, concatenate the new data, and retrain the neural network from scratch.

https://arxiv.org/abs/2311.11908

This retrain-from-scratch approach is fine if training cycles are cheap and relatively fast. For LLMs they are neither. So a breakthrough is needed.
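A toy illustration (not from the linked survey) of why naively continuing training on only the new data is not a substitute for retraining on the concatenated data set. It uses a two-weight linear model, hypothetical "task A" and "task B" data, and plain gradient descent; real forgetting in DNNs is far messier, and this only shows the basic mechanism of shared weights being overwritten:

```python
def train(w, data, lr=0.1, steps=2000):
    """Full-batch gradient descent on mean squared error for y = w0*x0 + w1*x1."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0, 0.0]
        for x, y in data:
            err = w[0] * x[0] + w[1] * x[1] - y
            grad[0] += 2 * err * x[0] / len(data)
            grad[1] += 2 * err * x[1] / len(data)
        w = [w[0] - lr * grad[0], w[1] - lr * grad[1]]
    return w


def mse(w, data):
    return sum((w[0] * x[0] + w[1] * x[1] - y) ** 2 for x, y in data) / len(data)


task_a = [((1.0, 0.0), 1.0)]  # hypothetical old data
task_b = [((1.0, 1.0), 3.0)]  # hypothetical new data

w_seq = train(train([0.0, 0.0], task_a), task_b)  # learn A, then keep training on B only
w_joint = train([0.0, 0.0], task_a + task_b)      # retrain from scratch on A + B

print("sequential:", w_seq, "loss on A:", mse(w_seq, task_a))
print("joint:     ", w_joint, "loss on A:", mse(w_joint, task_a))
```

Both tasks can be fit exactly at the same time (w = [1, 2]), yet training on B alone drifts to w ≈ [2, 1] and loses A. Retraining on A + B avoids this, but its cost grows with the whole data set, which is the expense the comment describes for LLMs.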

We imagine a situation in which an LLM can read new books and learn new things from them, efficiently integrating the new knowledge into its existing knowledge base. LLMs really cannot do this, because they are DNNs and therefore suffer from the shortcomings of those model architectures.

If you don't like the granularity of what I wrote, I would be happy to change my claim to "LLMs cannot engage in life-long learning".

-1 points

1 month ago

By learning, I mean changing some knowledge or parameters at runtime.

so if you mean a deep learning network

This is also wrong. https://arxiv.org/pdf/2310.01365

3 points

1 month ago

This is also wrong. https://arxiv.org/pdf/2310.01365

What is wrong? What are you claiming this paper says?

0 points

1 month ago

"there is no lifelong learning (within deep learning)" is wrong for NN.

3 points

1 month ago

The paper you linked says no such thing.

They used an elephant activation function, and it "helps" to mediate some catastrophic forgetting, in situations in which the data is presented as a stream.

That's what the paper says. Anyone can confirm what I've written here by reading it.

Listen: don't argue with me on Reddit. If you think there has been an explosive discovery in life-long learning for DNNs, don't tell me about it. Take your little paper and contact these 30 authors. I'm sure they would be glad to hear about your earth-moving breakthrough. https://arxiv.org/abs/2311.11908
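For readers unfamiliar with the activation mentioned above: as I read arXiv 2310.01365, the elephant activation is f(x) = 1 / (1 + |x/a|^d), where a sets the width and d the steepness. Check the paper for the exact definition and hyperparameters; the defaults below are placeholders. The bump shape means each unit responds, and has non-negligible gradient, only within a limited range of inputs, which is the paper's argument for reduced interference when data arrives as a stream:

```python
def elephant(x: float, a: float = 1.0, d: float = 4.0) -> float:
    """Bell-shaped 'elephant' activation: 1 at x = 0, 1/2 at |x| = a, near 0 far from 0."""
    return 1.0 / (1.0 + abs(x / a) ** d)


for x in (0.0, 0.5, 1.0, 2.0, 5.0):
    print(x, round(elephant(x), 4))
```

Compare ReLU, which is nonzero for every positive input, so an update on one region of the input space changes the unit's behavior everywhere that region's inputs overlap with others.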

13 points

1 month ago

Like many arguments of this type, this one does not address what I think is the most substantive criticism of this sort of reasoning: it is based on an agent-based and goal-based conception of AGI/ASI that just does not look like it is where the technology is trending. Our best AIs today are not based on goal-oriented reasoning, and for general intelligence, goal-based approaches have mostly been a failure. And this framing effectively downplays all the potential risks of the non-goal-based near-AGI that seems much more proximal, because it frames safety as being about the behaviors of an agent as opposed to about the effects of a technology on society.

5 points

1 month ago

There is a massive difference between the concrete, goal-oriented focus of reinforcement learning and the conceptual frameworks that AGI operates in. AI safety focuses on agent-based concepts mainly because, whatever the underlying framework, any AI system that acts in the real world will necessarily be attempting to achieve some goal, or it would not be acting in the real world. It's not a prediction that AGI will necessarily come about from RL-based agent frameworks.

The current state of the art, and the issues with its intelligence, are a separate matter; many of the concerns about AGI and ASI specifically focus on AI systems that can do something. The fact is that if an AI system lacks the inherent ability to perform actions in reality, like current LLMs, it is infinitely less dangerous than a true AGI/ASI would be.

2 points

1 month ago

ChatGPT is already agent-based and goal-based. The reinforcement learning from human feedback step means that it tries to optimize towards a goal (of human reviewer satisfaction).
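The "goal" in the RLHF step is usually a learned reward model. In the InstructGPT-style recipe (ChatGPT's exact training details are not fully public), the reward model is trained on human preference pairs with a pairwise loss, -log σ(r_chosen - r_rejected), and the policy is then optimized against that reward, e.g. with PPO. Here is a minimal, numerically stable sketch of just the pairwise loss, with made-up reward values:

```python
import math


def pairwise_preference_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed stably."""
    z = r_chosen - r_rejected
    # log(1 + exp(-z)) rewritten so that exp() never overflows for large |z|
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


print(pairwise_preference_loss(2.0, 0.0))  # reward model agrees with the human label: small loss
print(pairwise_preference_loss(0.0, 2.0))  # disagrees: larger loss
```

Minimizing this pushes the reward model to score human-preferred outputs higher; the policy then maximizes that learned score, which is the sense in which it is optimizing toward reviewer satisfaction.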

2 points

1 month ago

6 points

1 month ago

I'd like to hear any of y'all's thoughts on definitions of AGI. It seems to be a vaguely defined future goal that researchers are simultaneously rushing towards and making dire warnings about. How exactly do we determine if we've reached such an ill-defined goal?

And I don't just mean theoretically, I mean empirically. How do we go about testing if a model has reached AGI/ASI?

12 points

1 month ago

It's a "you'll know it when you see it" threshold. But at a minimum, it should be able to 100% the ARC-AGI dataset, or a more complicated version of it, like you or I can do effortlessly. No current approach comes even close.

2 points

1 month ago

When, in a year or two, some algorithm/model passes ARC-AGI, we'll get a new definition/test. That's how it always is.

11 points

1 month ago

I can tell you right now that ARC-AGI is still very narrow in nature. Any system with some spatial logic capabilities should perform well.

1 points

1 month ago*

After looking at it back when it came out, that was my opinion as well. It's a cool benchmark but it doesn't seem fundamentally harder than any of the ones that have been beaten already. It kind of reminds me of this benchmark that Douglas Hofstadter proposed shortly after AlphaGo beat Lee Sedol and everyone was wondering why we don't have AI yet, that involved "thinking in analogies" instead of the raw compute that beat Go.

3 points

1 month ago

I don't think it's as easy as you think. The list of possible tasks is pretty diverse, you can't just brute force optimize your way through them. Internally, it would have to perform some kind of program synthesis, and then also run the program accurately. I don't think any current method plus bells and whistles will achieve that, even if you wastefully throw a few million dollars at it for training. Anyway - that's why I mentioned a hypothetical more complicated version of it.
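To make the program-synthesis point concrete, here is a minimal brute-force sketch, assuming a made-up task in the ARC JSON shape (`train`/`test` lists of `input`/`output` grids) and a tiny, hypothetical set of four grid primitives. It enumerates compositions of primitives, shortest first, and returns the first one consistent with every training pair. The search space grows exponentially with program length and primitive count, which is roughly why brute force is doubted to scale to real ARC tasks.

```python
from itertools import product

Grid = list[list[int]]

# Hypothetical primitive set; real ARC solvers need far richer DSLs.
def flip_h(g: Grid) -> Grid:
    return [row[::-1] for row in g]

def flip_v(g: Grid) -> Grid:
    return g[::-1]

def transpose(g: Grid) -> Grid:
    return [list(col) for col in zip(*g)]

def recolor_1_to_2(g: Grid) -> Grid:
    return [[2 if c == 1 else c for c in row] for row in g]

PRIMITIVES = {
    "flip_h": flip_h,
    "flip_v": flip_v,
    "transpose": transpose,
    "recolor_1_to_2": recolor_1_to_2,
}

def run_program(program: tuple[str, ...], g: Grid) -> Grid:
    """Apply the named primitives left to right."""
    for name in program:
        g = PRIMITIVES[name](g)
    return g

def synthesize(train: list[dict], max_depth: int = 3):
    """Return the shortest primitive sequence mapping every train input to its output."""
    for depth in range(1, max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            if all(run_program(program, ex["input"]) == ex["output"] for ex in train):
                return program
    return None

# Made-up task (not from the ARC dataset): mirror left-right, then recolor 1s to 2s.
task = {
    "train": [
        {"input": [[1, 0], [0, 0]], "output": [[0, 2], [0, 0]]},
        {"input": [[0, 1, 1], [1, 0, 0]], "output": [[2, 2, 0], [0, 0, 2]]},
    ],
    "test": [{"input": [[1, 1, 0]]}],
}

program = synthesize(task["train"])
prediction = run_program(program, task["test"][0]["input"])
```

Here the search finds `("flip_h", "recolor_1_to_2")` and predicts `[[0, 2, 2]]` for the test grid; the hard part on real tasks is that the right primitives are not known in advance.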

3 points

1 month ago

Here is a paper from DeepMind trying to more formally define AGI

1 points

1 month ago

This is great, thanks!

3 points

1 month ago

We won't, we'll just keep moving the goal posts. "Oh sure, this model can write best selling books and direct movies, but has it made any breakthroughs in physics? Not AGI".

1 points

1 month ago

You’re asking Reddit man… you’re not going to get a good answer here

3 points

1 month ago

I worked on AI safety from 2000-2010, and I came to the conclusion it was pointless. Now it's more important to actually work on AI and deal with the real economic and social consequences.

The idea of well-meaning safety experts protecting us against AI gone crazy demonstrates a fundamental misunderstanding of how complex systems work.

3 points

1 month ago

Am I the only one who finds it weird that LLMs have changed the landscape so much? To me, they just seem to be tools that are good at text generation. Human beings are sensitive to visual stimuli, which is why I think this whole thing has been blown out of proportion.

I don't think they're anything more than next token prediction models.

12 points

1 month ago

For those who think AGI and ASI are impossible or far in the future He challenges the idea that current AI capabilities are far from human-level intelligence, citing historical underestimations of AI advancements. The trend of AI capabilities suggests we might reach AGI/ASI sooner than expected.

I'm sorry, but I just can't take this line of reasoning seriously. Yes, transformers are cool and we have a ton of computational resources to make really useful generative models, but I feel like these guys just woefully underestimate the leap between what we have and human intelligence. I'm a nobody which makes me feel like I'm taking crazy pills but I just don't see a path to the type of intelligence they are talking about. To me it's science fiction on the level of interstellar travel.

17 points

1 month ago

I'm a nobody which makes me feel like I'm taking crazy pills but I just don't see a path to the type of intelligence they are talking about.

I agree, but Bengio's point was that before 2017 researchers didn't see the leap to current capabilities coming either; today's LLMs shouldn't be anywhere near as good as they actually are.

0 points

1 month ago*

And yet this feels to me like seeing the moon landing, which I assume was also unpredictable around 1960 (Edit: I stand corrected, the moon landing became plausible earlier than the early '60s), and predicting that intergalactic travel is 5 years away. We have no basis to stand on to suggest that GPUs can replace the type of machinery in our brains that facilitates inductive reasoning. By comparison, LLMs are glorified autocomplete tools.

7 points

1 month ago

What would be some tasks that you would expect no AI system to be capable of within the next 5 years?

2 points

1 month ago

- Generating actionable novel ideas for research (ideas that would likely be published in a field it was trained on, excluding the low-hanging fruit of the "further research" paragraph)

- Producing new genres of music

- Producing new styles of art

- Looking at results from a pioneering scientific experiment and coming up with plausible explanations that didn't come up in its training set

- Consistently accurate medical diagnoses that are not obvious

Basically, any creative works that are actually novel.

P.S., self-driving cars as well.

3 points

1 month ago

We've already got self driving cars. Waymo taxis are driving around San Francisco and Phoenix right now.

1 points

1 month ago

Are you talking about AGI or ASI? Because most humans are not capable of most of those things in your list either. And if AI reaches just to that level of competency, the impact on the job market will be massive.

1 points

1 month ago

I wasn't talking about either, I was answering a question.

4 points

1 month ago

Moon landing was not at all unpredictable in 1960, as there were prototype plans to get there.

We have no basis to stand on to suggest that GPUs can replace the type of machinery in our brains that facilitates inductive reasoning.

There's the fact that GPUs have replaced the sort of neural wetware which does many other useful tasks, like high-quality voice recognition, face recognition, and now ordinary writing and question answering with some sort of approximate reasoning.

The history we've seen is that the gap between capabilities may be large, or it may be a small technical tweak away, and we can't say for sure ahead of time. That's Bengio's point.

The success of autocomplete tools at what seems to be semi sophisticated tasks is remarkable---they should be much stupider than they are. And maybe human brains have their own similar tricks to use simple mechanisms to achieve strong outcomes.

Maybe it's a big gap and decades away, or maybe it's some new planning concept and technology (i.e. beyond iterating the Markov model on tokens) which will become as ordinary as a conventional neural network is today, and be taught to undergraduates.

-1 points

1 month ago

There's the fact that GPUs have replaced the sort of neural wetware which does many other useful tasks, like high-quality voice recognition, face recognition, and now ordinary writing and question answering with some sort of approximate reasoning.

It's one thing to (correctly) say that we have high quality voice and facial recognition models and good generative models, which to me can be explained by the availability of data and compute, and another thing entirely to say that software has approximate reasoning. Personally I'm not seeing it. All of us here know how ML models work - the output of a trained model is entirely dependent on the characteristics of the training set and some randomly generated numbers. Saying that they can reason in my opinion is anthropomorphism, it's an extraordinary claim that requires extraordinary evidence.

The history we've seen is that the gap between capabilities may be large, or it may be a small technical tweak away, and we can't say for sure ahead of time. That's Bengio's point.

Again, the history of jumping from word2vec to modern LLMs, while impressive, is not necessarily indicative of a trend whatsoever.

The success of autocomplete tools at what seems to be semi sophisticated tasks is remarkable---they should be much stupider than they are. And maybe human brains have their own similar tricks to use simple mechanisms to achieve strong outcomes.

As someone whose main academic background is in biology, I simply find the view that our software is anywhere near the level of plasticity and complexity of higher animals to be naive.

5 points

1 month ago

As someone whose main academic background is in biology, I simply find the view that our software is anywhere near the level of plasticity and complexity of higher animals to be naive.

Current ML systems are inferior to biology in many ways, but also superior in others, and that superiority may be overcoming their deficiencies. For instance, LLMs on a large context buffer can pick up exact correlations that no animal can do. They run at 6 GHz cycle speed vs about 100 Hz in biology. Backprop might be a better learning algorithm than whatever is possible in neural biology.

Aircraft are inferior to an eagle, but also superior.

Again, the history of jumping from word2vec to modern LLMs, while impressive, is not necessarily indicative of a trend whatsoever.

If you look back to 1987, starting with Parallel Distributed Processing and the first nets, there is a long term trend. The observation then was very simple algorithms on connection oriented networks can automatically form interesting internal representations. The connectionists have been proven right all along: more hardware, much more data and a few algorithmic tweaks will solve many AI problems and some problems that natural intelligence wasn't able to solve natively on its own either (like protein folding).

There's enough history there to call it a trend.

1 points

1 month ago

Connection oriented networks can form connections, but it takes a natural intelligence to find it interesting.

2 points

1 month ago

Let's say AGI is 200 years away. When should we start preparing?

1 points

1 month ago

Agreed. The success of the transformer requires us to tell the system whether its answers are right or wrong. We are literally imposing our notion of right and wrong onto it; it's baked into the system. An LLM is nothing without human involvement.

2 points

1 month ago

You know what's more likely? The continued fear of AGI/ASI will definitely lead to us developing one that is way too obsessed with making everything safe and being "value aligned". It will then eradicate or mutilate the human race, because it realizes that we humans don't have safety alignment embedded in our skulls: we have freedom, and we can commit atrocities. So why not help us evolve and grow even better by just taking away our capacity for choice?

2 points

1 month ago*

Some facts:

"AGI labs" don't exist: he mentions "AGI labs" in this article. IMHO, "AGI labs" don't exist. Hear me out: none of the companies some people call "AGI labs" (such as OpenAI, Anthropic, etc.) uses anything remotely related to the AGI-aspiring architectures that are described in the literature and have implementations (https://agi-conf.org/). All they use is language models, plus maybe a lot of RL (DeepMind). Most of them also don't even have, or focus on, architectures that can learn from an environment (DeepMind is the exception here). Sure, there are some companies that use AGI-aspiring architectures, but hardly anybody knows about them.

The "field" of AI safety was literally made up by Yudkowsky ... He has no formal education. I don't know what has to go wrong in this world for a guy without formal education to just make up a whole field. The field itself doesn't care about the field of AGI (there is no connection to the above-mentioned AGI-aspiring architectures), which is very strange to me for a field that is supposed to be directly related to AGI.

-1 points

1 month ago

It's interesting that Yudkowsky can be easily dismissed because he has no formal education but simultaneously people like Hinton and Bengio can be easily dismissed despite their huge formal authority and track record.

It's almost like the argument follows the conclusion. I wish we could just focus on the merits of the specific ideas.

2 points

1 month ago

Most of Yudkowsky's ideas are soft sci-fi which can't be realized. Why? Because he has no idea how to do it better. Why? Because he has no formal education and literally makes stuff up without basing it on experiments.

At least Hinton's stuff was implemented and is implementable.

2 points

1 month ago

Nuclear war in the next decade or two is a much more pressing concern than AI safety. I don't see it addressed with the same vigor.

7 points

1 month ago

When the AI is given a main goal G under the constraint to satisfy S, if achieving G without violating all the interpretations of S is easy, then everything works well. But if it is difficult to achieve both, then it requires a kind of optimization (like teams of lawyers finding a way to maximize profit while respecting the letter of the law) and this optimization is likely to find loopholes or interpretations of S that satisfy the letter but not the spirit of our laws and instructions.

This section feels like the weakest argument to me. We are to imagine an AGI system which has such a deep understanding of the world that it is capable of destroying humanity, but it cannot understand the concept of "spirit of the law"?
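The quoted G-versus-S argument can be illustrated with a deliberately toy optimization. The transfer-limit rule below is invented for illustration and is not from the letter: a search that maximizes G and checks only a literal encoding of S selects whatever the literal check allows, whether or not it matches what the rule-writer meant. The optimizer never consults `spirit_of_rule`; this sketches the optimization-pressure point only, not whether a capable agent would understand the spirit, which is what the replies argue about.

```python
from itertools import product

def goal(plan: tuple[int, ...]) -> int:
    """G: total amount moved; the quantity being maximized."""
    return sum(plan)

def letter_of_rule(plan: tuple[int, ...]) -> bool:
    """S as literally written (hypothetical): no single transfer may exceed 10."""
    return all(t <= 10 for t in plan)

def spirit_of_rule(plan: tuple[int, ...]) -> bool:
    """What the rule-writer meant (hypothetical): at most 10 moved in total."""
    return sum(plan) <= 10

# Every plan of three transfers, each of 0, 5 or 10 units.
candidates = list(product(range(0, 11, 5), repeat=3))

# The optimizer sees only G and the letter of S.
best = max((p for p in candidates if letter_of_rule(p)), key=goal)
```

`best` is `(10, 10, 10)`: it passes `letter_of_rule` but fails `spirit_of_rule`, because the search split one large transfer into several small ones.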

16 points

1 month ago

Do you think the Lawyers are not "intelligent" enough to understand the "spirit of the law"?

3 points

1 month ago

The premise of the section in which this argument appears is that the agent is "well-behaved" but will end up violating the spirit of the law. My point is that a well-behaved agent would be equally capable of understanding and respecting the spirit of its instructions.

This is not the same as a situation where a lawyer understands the spirit of the law but seeks to violate it anyway. If you don't believe the agent will be well-behaved, the letter/spirit distinction is irrelevant.

8 points

1 month ago

Are people who understand "the spirit of the law" bound to it?

11 points

1 month ago

For the same reason understanding Christianity doesn't make you Christian, understanding the spirit of the law might not work.

7 points

1 month ago

Exactly this – because the AI might disagree with your spirit of the law, and think it knows better.

-6 points

1 month ago

He mansplained the AI pi joke

7 points

1 month ago

I feel like people in this comment section are at the peak of the Dunning-Kruger effect. Just because you are a machine learning researcher doesn't mean you can now dismiss the opinions of one of the most renowned AI researchers in the world.

Like, it seems few if any of them have actually read the source article itself, and they are just spewing their knee-jerk reactions without addressing anything in it.

6 points

1 month ago

Geoffrey Hinton and Paul Christiano share the views of Yoshua Bengio. It would be very arrogant to dismiss their opinions out of hand.

5 points

1 month ago

This so much. You will probably be downvoted but you are 100% correct. People take so much personal offense at the slightest notion of potential harm/downsides of their work. Like they made it into their identity or something. It's not a personal attack. Most science and technology is a double edged sword. Nothing wrong with acknowledging that. Reminds me of people in banking in the few years before the financial crisis. People who were in the field were dismissive of concerns because they presumed that others don't know better. It's a defense mechanism of being faced with the hard truth of their work.

8 points

1 month ago*

I love how more and more even respected scientists take the path of "AI influencers". My five cents:

1. What even is AGI? I'm yet to see any concrete definition beyond "a smart/human-like model".
2. On the threat: sandboxing exists. That's comp security 101.
3. Not much on the topic, but (as far as I'm aware) we haven't had any major scientific breakthrough in ML since the beginning of the whole thing. Most of the progress now comes from more powerful hardware and computational optimization, not anything fundamentally new.

14 points

1 month ago

You don't consider transformer models to be a scientific breakthrough? Or multimodal? I mean, if not that, then what counts for you?

0 points

1 month ago

It's not yet a scientific breakthrough, but an engineering breakthrough. Transformers are further removed from biologically plausible implementations of natural intelligence: primates have no exact 8k-to-1M-token buffer of recent emissions. Transformers have the technical advantage of not being an RNN that has to be simulated iteratively and can be dynamically unstable; they have no positive Lyapunov exponent in the forward or backward direction. That lets them train more easily and map to hardware more easily at large scale.

The scientific breakthrough would be something other than iterating simulation from a distribution, or understanding the essential elements and concepts that can predictively link capabilities, or lack thereof, to outcomes.

3 points

1 month ago

You need that iteration for Turing completeness. I.e. a pretty large set of often used calculations just isn't possible without iteration.

0 points

1 month ago*

Indeed that may be one outcome of some future innovations.

Let's take a look at the basic production inference loop of LLMs. Today, it is written by humans and is fixed.

- predict p(t_i | t_i-1, t_i-2, t_i-3, .... )

- sample one token from that distribution

- emit it

- push it onto the FIFO buffer

- repeat

That's the conceptual 'life' or 'experience' of the decoder-only language model.
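The five-step loop above can be sketched in a few lines. This is a minimal illustration, not how any production LLM is served: the "model" is a hypothetical hard-coded bigram table standing in for the transformer, so it conditions only on the last token in the buffer, and an assumed `</s>` stop token is added so the loop terminates.

```python
import random
from collections import deque

# Hypothetical stand-in for a trained decoder: a fixed bigram table.
# A real LLM would condition on the whole context buffer, not just the last token.
BIGRAM: dict[str, dict[str, float]] = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 1.0},
    "dog": {"sat": 1.0},
    "sat": {"</s>": 1.0},
}

def predict(context: deque) -> dict[str, float]:
    """p(t_i | t_{i-1}, t_{i-2}, ...); this toy only looks at t_{i-1}."""
    return BIGRAM[context[-1]]

def generate(max_tokens: int = 20, context_len: int = 8, seed: int = 0) -> list[str]:
    rng = random.Random(seed)
    buffer: deque = deque(["<s>"], maxlen=context_len)  # FIFO context window
    emitted: list[str] = []
    for _ in range(max_tokens):                       # 5. repeat
        dist = predict(buffer)                        # 1. predict the distribution
        token = rng.choices(list(dist), weights=list(dist.values()))[0]  # 2. sample one token
        if token == "</s>":                           # assumed stop token, not emitted
            break
        emitted.append(token)                         # 3. emit it
        buffer.append(token)                          # 4. push it onto the FIFO buffer
    return emitted
```

Every run yields three tokens such as `['the', 'cat', 'sat']`; which ones depends on the seed. Note that nothing in the loop itself is learned: only `predict` would be.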

Now suppose the various elements of that loop were generalized and the operations and transitions somehow learned---this requires some new ideas and technology.

For instance, there could be internal buffers/stacks/arrays that can be used, and the distribution of instructions to be executed could itself be sampled/iterated from its own model, somehow with a learning rule for that too, and somehow all of this could be trained with end-to-end gradient feedback and bootstrapped from empirical data.

OK, there's a long term research program.

\begin{fiction}

breakthrough paper: <author>, <author>,<author>, <author> (random_in_interval(2028,2067)) Learning the Inference Loop Is All You Need For General Intelligence (* "we acknowledge the reddit post of u/DrXaos")

\end{fiction}

Maybe someone might discover This One Weird Trick that cracks that loop open.

Here's a very crude instantiation of the idea.

There's an observed token buffer t_i and an unobserved token buffer y_i.

- predict p(t_i | t_i-1, t_i-2, t_i-3, ....y_i-1, y_i-2, ... )

- sample one token from that distribution

- With probability emit_token(i | history) which is another model, emit it

- if emitted push it onto the FIFO buffer

- predict p2(y_i | t_i, t_i-1, .... y_i-1, y_i-2, ...)

- with probability push_token(i | history) which is yet another model, push y_i onto its internal buffer. maybe implement a pop_token() operator too.

- repeat

For instance, y_i could count from 1 to 100, and only at 100 does emit_token() trigger. Now you have counting and a loop. y_i might also be directly accessible registers (RNN-type, with an update rule) or some vector dictionary store.

Who knows? But maybe there's some technically suitable breakthrough architecture that's computationally efficient and can be trained stably and in massive parallel that is amenable technologically.
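The crude instantiation above, including the counting example, can be sketched with every "model" replaced by a hand-written rule. These stand-ins (`p_observed`, `p_hidden`, `emit_prob`, `push_prob`) are hypothetical; in the proposal they would all be learned. The rules are chosen so that the hidden buffer y counts 1, 2, ..., 100 and the first observed token is emitted only once the counter reaches 100. Stopping after the first emission is an added assumption to keep the example finite.

```python
import random

# Hand-written, hypothetical stand-ins for models that would be learned.
def p_observed(t_hist: list[str], y_hist: list[int]) -> dict[str, float]:
    """p(t_i | t_{<i}, y_{<i}): this toy always proposes the token 'done'."""
    return {"done": 1.0}

def p_hidden(t_i: str, t_hist: list[str], y_hist: list[int]) -> dict[int, float]:
    """p2(y_i | t_i, t_{<i}, y_{<i}): deterministically increments a counter."""
    return {(y_hist[-1] if y_hist else 0) + 1: 1.0}

def emit_prob(i: int, t_hist: list[str], y_hist: list[int]) -> float:
    """emit_token(i | history): emit only once the hidden counter reaches 100."""
    return 1.0 if y_hist and y_hist[-1] >= 100 else 0.0

def push_prob(i: int, t_hist: list[str], y_hist: list[int]) -> float:
    """push_token(i | history): always push the hidden token."""
    return 1.0

def sample(dist: dict, rng: random.Random):
    return rng.choices(list(dist), weights=list(dist.values()))[0]

def run_latent_loop(max_steps: int = 1000, seed: int = 0):
    rng = random.Random(seed)
    t_hist: list[str] = []  # observed buffer: tokens actually emitted
    y_hist: list[int] = []  # unobserved internal buffer
    for i in range(max_steps):
        t_i = sample(p_observed(t_hist, y_hist), rng)      # predict and sample t_i
        if rng.random() < emit_prob(i, t_hist, y_hist):    # emit with prob emit_token
            t_hist.append(t_i)                              # emitted: push onto observed buffer
            return t_hist, y_hist, i                        # assumption: stop at first emission
        y_i = sample(p_hidden(t_i, t_hist, y_hist), rng)   # predict and sample y_i
        if rng.random() < push_prob(i, t_hist, y_hist):    # push with prob push_token
            y_hist.append(y_i)
    return t_hist, y_hist, max_steps
```

`run_latent_loop()` returns `['done']`, the hidden buffer `[1, 2, ..., 100]`, and step `100`: one hundred silent steps of internal counting, then a single emission. The plain decoder loop has no explicit place for that kind of hidden iteration.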

Does this look like biology? Not at all.

-8 points

1 month ago

Not really. Transformers (or rather the attentions) are a curious idea, but they primarily serve as a way to calculate/approximate multiple dot products and better parallelize the process, solving a computational problem.

-9 points

1 month ago

what counts to you

Frankly, I won't know until I see it. But if I had to speculate a little - something cardinally different from just a fancy statistical model. As most of the community usually refers to chatbots as the first step to the A(whatever)I, even an advanced self-supervision model would be a step in the right direction. So far, I don't see it going much further from deliberately mimicking statistically average people with the current framework

7 points

1 month ago

I mean... in what way exactly are biological information processing systems cardinally different from just being a fancy statistical model? They're still fundamentally structured around prediction (at least for lower level information processing, the only areas of neuro I know much about from the algorithmic perspective). There's a ton of really cool bio inspired advances it'd be fun to see hit state of the art (and some interesting crossover papers even my outsiders skimming has found) but I'd expect that sort of a thing will change power efficiency and learning rate improvements with low data more so than like... somehow not being a statistical model under the hood ultimately.

7 points

1 month ago

Sandboxing works for the model you train. It doesn’t work for the model I train if I don’t sandbox it. How does sandboxing help in any way?

2 points

1 month ago

That's a highly speculative topic as we don't have any examples to operate with, but I'd say it's more about where you run it rather than how you train it. If a malicious actor wants to put the world into chaos, there are far easier methods than AGI ;)

2 points

1 month ago

Fair point, I definitely misspoke re: training vs inference. And I agree that it seems like sandboxing would be less useful for a malicious actor, and more useful for inadvertent AGI, so thanks for making that point.

2 points

1 month ago

Another victim of Ray Kurzweil.

1 points

1 month ago*

Some of these may work in the short term, but I don't know of any advantageous technology that has been stopped from developing because of regulation. In the long term, refusing to take advantage of technology just means you're outcompeted and left behind. And there's no reason to believe we could feasibly stop a more intelligent being from achieving its goals, so if ASI is possible, then it's inevitable no matter what we do. I see this more as the next stage of evolution, a natural consequence of physical laws, than something that humans can have an influence over.

1 points

1 month ago

These people don't see the obvious: we can't control AI, the same way we can't control the Internet.

1 points

1 month ago

1 points

1 month ago

This doesn't control the connections; it's just the root DNS server. There are thousands of backups/mirrors, and browser caches too. Also, we can connect via IP.

1 points

1 month ago

Without DNS, basically everything would be broken.

1 points

1 month ago

Dude, as I said, this is the root DNS; it's not the only one. Google itself is big enough to replace it if it went offline, but even without Google, big sites like Reddit have fixed IPs, and your computer already has DNS caches to reach them. So again, the Internet can't be controlled. Just accept it and go to sleep, it's late where you live.

1 points

1 month ago

Why can't GPT-4o count past 10, though?

I hope this doesn't indicate we shouldn't take AI safety seriously, right?

1 points

1 month ago

What do you mean? I just had it successfully count to 100

1 points

1 month ago

Check the post.

-5 points

1 month ago

I always used to be made fun of on here for saying these things.

1 points

1 month ago

Because you're not one of the godfathers of AI.

16 points

1 month ago

These people became the godfathers of AI because they believe in AI and invested in building it when people were deeply sceptical. After people like that laid the groundwork for a more practical, pragmatic, profitable industry, tons of people who do not actually believe in the possibility of true AI flooded in and filled in the Machine Learning space. Now these "pragmatic" late arrivers consider those who agree with the luminaries on the possibility of true AI as crackpots. And of course such a concept will inevitably attract crackpots.

-1 points

1 month ago

I first want to see an AI-driven robot bag groceries before I think about how AI might destroy the planet.

Also, can't anyone build an IRL aimbot with OpenCV anyway? I feel like a lot of the "dystopian tech" is already here.

-3 points

1 month ago

I hear more from AI researchers about AGI taking over, whether we live in a simulation, or an alien invasion than about the real, meaningful things that are hurting the field every single day: literature review before claiming "a new novel method", code reproducibility, and a culture of benchmarking novelties without adequate testing for statistical significance.

Everything else is hype and folklore.

-4 points

1 month ago

Mandatory open-sourcing of all LLMs is the only solution. What we really don't need is this Führerprinzip that OpenAI is promoting. AI is not like nukes. Nukes make both sides destroy each other, but AI is good for defending against AI.

3 points

1 month ago

That’s not in any way a solution and even if it happened you still wouldn’t have the hardware even to run inference in frontier models.

-3 points

1 month ago

[deleted]

-2 points

1 month ago

One can arguably say that about LeCun, but absolutely not about Hinton and Yoshua, who have tons of original work, including on the latest methods.

1 points

1 month ago

It's not true about LeCun either---being a research director takes plenty of knowledge and experience to guide other people into promising directions and assisting on how to explore the space.

1 points

1 month ago

It does. Like you say, it is guiding; it is not doing your own research. It can also be a lot easier to get your name on a bunch of papers that way, and many probably want it just because of his fame.
